In this blog series, we will take a deeper dive into the work we have previously done. In the first part of the series, we want to showcase a project that we worked on with Suomen Ympäristökeskus (SYKE [1]) in 2018–2019.

A few years back we worked with the Finnish Environment Institute (SYKE) on a project to monitor Finnish lakes and nature. The purpose of this project, called ÄlyVesi, was to test the limits of AI technologies in automating tasks out in nature. The project included multiple subproblems, the first of which we will introduce in this post.

Measuring water levels

Water level measurements are one of the standard ways to monitor the state of our waters.

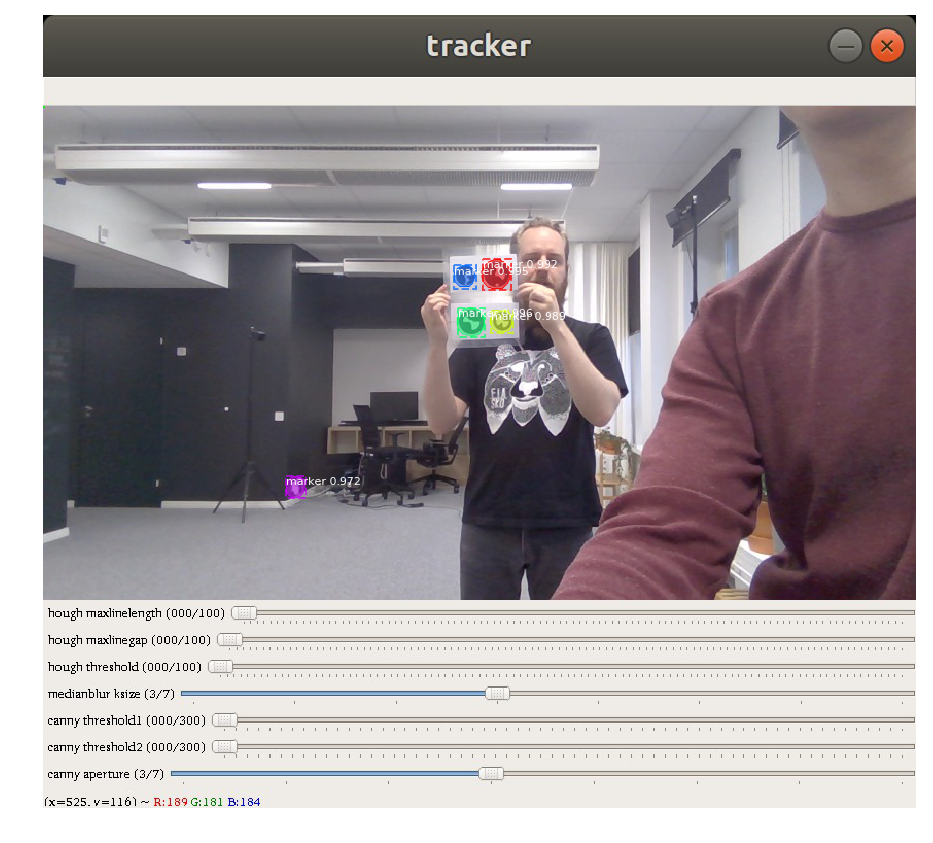

The task was to use trail cameras pointed at the water height scales of official measurement stations. The cameras would take an image every hour and upload it to the cloud for our system to decipher. The cameras would be mounted wherever a suitable post happened to be, so they would view the water scale at various angles and under varying illumination.

The solution

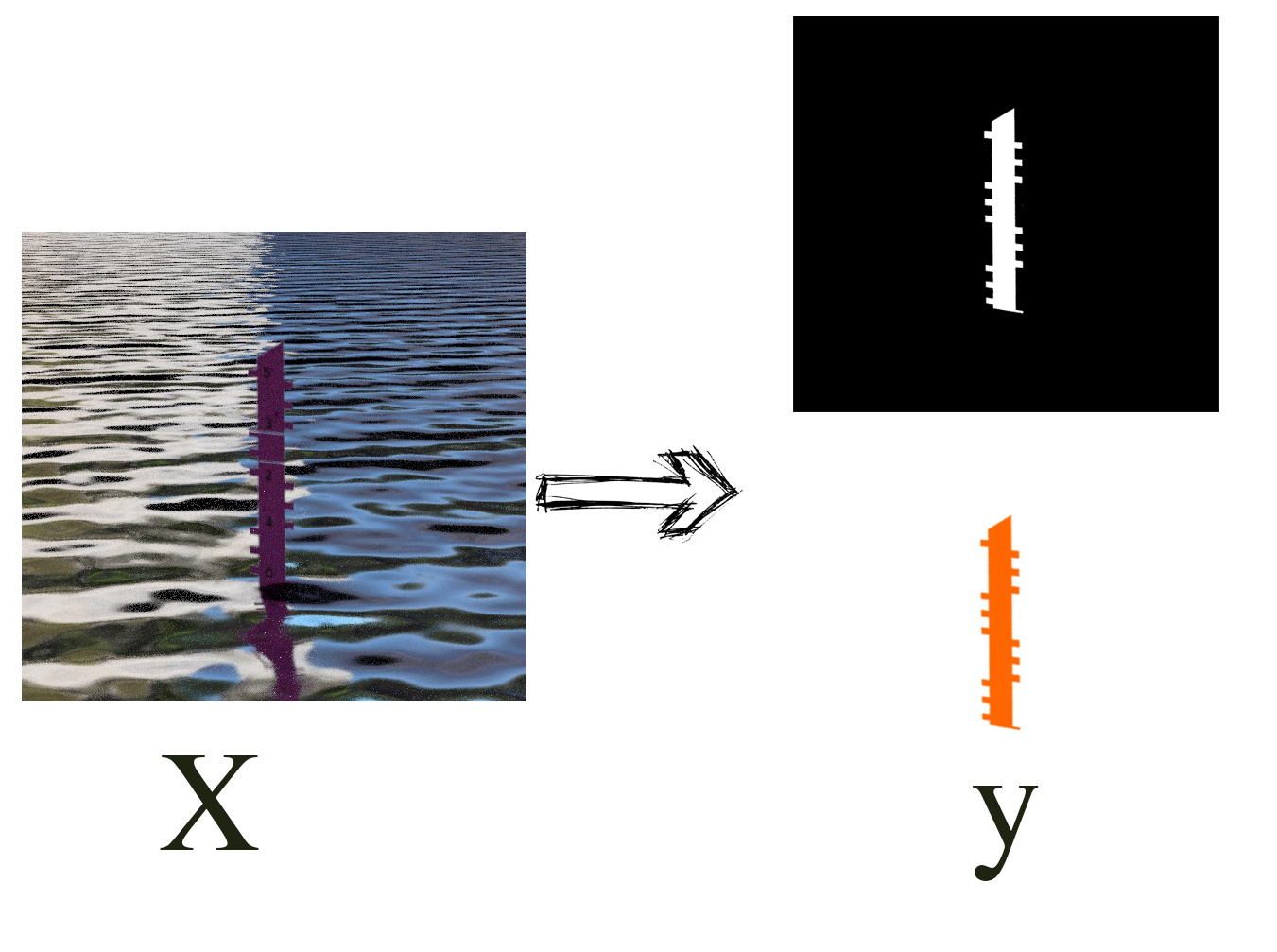

Because conditions in the field vary so much, we decided to use robust segmentation methods to first detect the water scale, then find the point where the scale is cut off at the water level, and finally link that pixel height to a real-world height in centimeters. Initially, we thought we would also read the real-world height from the numbers on the scale. Unfortunately, it soon became evident that the installed placards only showed the centimeters, not the meters, so we would have to make further installations on the scales anyway. So why not go all the way and solve the problem of skew at the same time?

Enter our custom-crafted fiducials, or "pizzas" as we called them, each of which encoded an 8-bit message. By placing three of these along the scale, we could reliably infer the height in centimeters and correct for the skew of the camera. Each fiducial's encoding would include the height in centimeters, the height in meters, and finally an error-detection number to verify the result.
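To make the idea of an 8-bit message with a built-in check concrete, here is a minimal sketch. The bit layout is purely an assumption for illustration (2 bits for meters, 4 bits for the height within the meter in 10 cm steps, 2 parity bits), and the names `encode`, `decode` and `checksum` are hypothetical. It is not the layout used on the actual fiducials.

```python
from typing import Optional

# Hypothetical split of the 8 bits; the real layout is not described in this post.
METER_BITS, DECIMETER_BITS, CHECK_BITS = 2, 4, 2


def parity(value: int) -> int:
    """Return 1 if `value` has an odd number of set bits, else 0."""
    return bin(value).count("1") % 2


def checksum(meters: int, decimeters: int) -> int:
    """Two parity bits: one over the meter field, one over the decimeter field."""
    return (parity(meters) << 1) | parity(decimeters)


def encode(meters: int, decimeters: int) -> int:
    """Pack meters (0-3) and decimeters (0-9) plus the check bits into one byte."""
    if not 0 <= meters < (1 << METER_BITS):
        raise ValueError("meters out of range")
    if not 0 <= decimeters <= 9:
        raise ValueError("decimeters out of range")
    payload = (meters << DECIMETER_BITS) | decimeters
    return (payload << CHECK_BITS) | checksum(meters, decimeters)


def decode(message: int) -> Optional[int]:
    """Return the encoded height in centimeters, or None if the check fails."""
    check = message & ((1 << CHECK_BITS) - 1)
    decimeters = (message >> CHECK_BITS) & ((1 << DECIMETER_BITS) - 1)
    meters = (message >> (CHECK_BITS + DECIMETER_BITS)) & ((1 << METER_BITS) - 1)
    if decimeters > 9 or check != checksum(meters, decimeters):
        return None
    return meters * 100 + decimeters * 10
```

With this toy layout, `decode(encode(1, 4))` gives 140 (cm), and a message with any single bit flipped is rejected, because each flip changes exactly one parity relation.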

To detect both the fiducials and the water scale, we ended up using Mask R-CNN, a neural network for object detection and instance segmentation. We couldn't conveniently gather enough real-world images to train these networks, so we decided to simulate them instead.

Both the fiducials and the scales were randomly generated as 2D images, complete with dirt, obscured parts and illumination variations, and pasted over ordinary background images. Now we could generate thousands of training images without ever leaving the office!
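The compositing idea can be sketched roughly as follows, assuming NumPy and grayscale or RGB arrays. The function name `composite`, the single rectangular occlusion and the brightness gain range are all assumptions made for illustration, not a description of our actual generator.

```python
import numpy as np


def composite(background, patch, top, left, rng):
    """Paste `patch` onto a copy of `background`.

    Returns the augmented image and a boolean mask of the visible patch pixels,
    which could serve as a segmentation training target.
    Assumes the patch is at least 2x2 pixels and fits inside the background.
    """
    image = background.astype(np.float32).copy()
    patch = patch.astype(np.float32)
    h, w = patch.shape[:2]

    # Simulated dirt/occlusion: hide one random rectangle of the patch.
    visible = np.ones((h, w), dtype=bool)
    oh, ow = rng.integers(1, h // 2 + 1), rng.integers(1, w // 2 + 1)
    oy, ox = rng.integers(0, h - oh + 1), rng.integers(0, w - ow + 1)
    visible[oy:oy + oh, ox:ox + ow] = False

    # Copy only the visible patch pixels into the background region.
    region = image[top:top + h, left:left + w]  # a view into `image`
    region[visible] = patch[visible]

    # Simulated illumination change: one random global brightness gain.
    gain = rng.uniform(0.5, 1.5)
    image = np.clip(image * gain, 0, 255).astype(np.uint8)

    mask = np.zeros(background.shape[:2], dtype=bool)
    mask[top:top + h, left:left + w] = visible
    return image, mask
```

Calling this in a loop with `rng = np.random.default_rng()`, random positions and a pool of background photos yields image and mask pairs without any manual labeling.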

The pipeline in summary:

- Detect the scale and the fiducials by segmenting them into masks with Mask R-CNN [2]

- Decipher the fiducials, and translate the middle one's pixel height into centimeter height

- Correct the skew with the three points the fiducials form, and utilize the known distance between the fiducials to project centimeter points for all pixels along the scale

- Find the lowest point on the scale mask, and mark that pixel as the 'water level'

- Convert the 'water level' pixel into centimeters, and return this result to the database
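The last three steps can be illustrated with a simplified sketch. It assumes the fiducial centers and their decoded heights are already known, treats the scale as a straight line through the first and last fiducial, and fits a linear pixel-to-centimeter map by least squares. This handles in-plane tilt but ignores perspective foreshortening, so it is a simplification rather than our exact skew correction. The names `fit_pixel_to_cm` and `lowest_mask_point` are hypothetical.

```python
import math
from typing import Callable, Sequence, Tuple

Point = Tuple[float, float]  # (x, y) in pixels, y grows downwards


def fit_pixel_to_cm(centers: Sequence[Point],
                    heights_cm: Sequence[float]) -> Callable[[Point], float]:
    """Return a function mapping an image point to centimeters along the scale."""
    (x0, y0), (x1, y1) = centers[0], centers[-1]
    length = math.hypot(x1 - x0, y1 - y0)
    ux, uy = (x1 - x0) / length, (y1 - y0) / length  # unit vector along the scale

    def along(p: Point) -> float:
        # Signed distance of p along the scale axis, measured from the first fiducial.
        return (p[0] - x0) * ux + (p[1] - y0) * uy

    # Ordinary least-squares line: height_cm = slope * along + intercept.
    ts = [along(c) for c in centers]
    t_mean = sum(ts) / len(ts)
    h_mean = sum(heights_cm) / len(heights_cm)
    slope = (sum((t - t_mean) * (h - h_mean) for t, h in zip(ts, heights_cm))
             / sum((t - t_mean) ** 2 for t in ts))
    intercept = h_mean - slope * t_mean
    return lambda p: slope * along(p) + intercept


def lowest_mask_point(mask: Sequence[Sequence[int]]) -> Point:
    """Return the lowest set pixel of a binary mask (rows of 0/1) as (x, y)."""
    for y in range(len(mask) - 1, -1, -1):
        xs = [x for x, value in enumerate(mask[y]) if value]
        if xs:
            return (sum(xs) / len(xs), float(y))  # horizontal center of that row
    raise ValueError("mask is empty")
```

For example, with fiducials at pixels (10, 10), (10, 60) and (10, 110) decoded as 200, 150 and 100 cm, a scale mask whose lowest point is at (10, 135) maps to 75 cm.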